3.26. VGG16 and ImageNet¶

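ImageNet is a large image dataset, and the ImageNet Large Scale Visual Recognition Challenge (ILSVRC) is an image classification and localization competition built on it. VGG16 is a 16-layer network architecture, with weights trained on the competition dataset, from the Visual Geometry Group (VGG).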

In this notebook we test the network on sample images.

3.26.1. Constructing the Network¶

First, we import the conx library:

In [1]:

import conx as cx

Using TensorFlow backend.

Conx, version 3.6.8

We can load in a number of predefined networks, including “VGG16” and “VGG19”:

In [2]:

net = cx.Network.get("vgg16")

WARNING: no such history file '/home/dblank/.keras/models/history.pickle'

We get a warning letting us know that this is a trained model, but no training history exists. That is because this model was trained outside of Conx.

Let’s see what this network looks like:

In [3]:

net.picture(rotate=True)

Out[3]:

Predefined networks have an info method describing the network:

In [4]:

net.info()

Network: VGG16

- Status: compiled

- Layers: 23

This network architecture comes from the paper:

Very Deep Convolutional Networks for Large-Scale Image Recognition by Karen Simonyan and Andrew Zisserman.

Their network was trained on the ImageNet challenge dataset. The dataset contains 32,326 images broken down into 1,000 categories.

The network was trained for 74 epochs on the training data. This typically took 3 to 4 weeks time on a computer with 4 GPUs. This network’s weights were converted from the original Caffe model into Keras.

Sources:

- https://arxiv.org/pdf/1409.1556.pdf

- http://www.robots.ox.ac.uk/~vgg/research/very_deep/

- http://www.image-net.org/challenges/LSVRC/

- http://image-net.org/challenges/LSVRC/2014/

- http://image-net.org/challenges/LSVRC/2014/browse-synsets

We can see a complete summary of each layer:

In [5]:

net.summary()

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

input_1 (InputLayer) (None, 224, 224, 3) 0

_________________________________________________________________

block1_conv1 (Conv2D) (None, 224, 224, 64) 1792

_________________________________________________________________

block1_conv2 (Conv2D) (None, 224, 224, 64) 36928

_________________________________________________________________

block1_pool (MaxPooling2D) (None, 112, 112, 64) 0

_________________________________________________________________

block2_conv1 (Conv2D) (None, 112, 112, 128) 73856

_________________________________________________________________

block2_conv2 (Conv2D) (None, 112, 112, 128) 147584

_________________________________________________________________

block2_pool (MaxPooling2D) (None, 56, 56, 128) 0

_________________________________________________________________

block3_conv1 (Conv2D) (None, 56, 56, 256) 295168

_________________________________________________________________

block3_conv2 (Conv2D) (None, 56, 56, 256) 590080

_________________________________________________________________

block3_conv3 (Conv2D) (None, 56, 56, 256) 590080

_________________________________________________________________

block3_pool (MaxPooling2D) (None, 28, 28, 256) 0

_________________________________________________________________

block4_conv1 (Conv2D) (None, 28, 28, 512) 1180160

_________________________________________________________________

block4_conv2 (Conv2D) (None, 28, 28, 512) 2359808

_________________________________________________________________

block4_conv3 (Conv2D) (None, 28, 28, 512) 2359808

_________________________________________________________________

block4_pool (MaxPooling2D) (None, 14, 14, 512) 0

_________________________________________________________________

block5_conv1 (Conv2D) (None, 14, 14, 512) 2359808

_________________________________________________________________

block5_conv2 (Conv2D) (None, 14, 14, 512) 2359808

_________________________________________________________________

block5_conv3 (Conv2D) (None, 14, 14, 512) 2359808

_________________________________________________________________

block5_pool (MaxPooling2D) (None, 7, 7, 512) 0

_________________________________________________________________

flatten (Flatten) (None, 25088) 0

_________________________________________________________________

fc1 (Dense) (None, 4096) 102764544

_________________________________________________________________

fc2 (Dense) (None, 4096) 16781312

_________________________________________________________________

predictions (Dense) (None, 1000) 4097000

=================================================================

Total params: 138,357,544

Trainable params: 138,357,544

Non-trainable params: 0

_________________________________________________________________

This network is huge! 138 million weights!
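The per-layer counts in the summary can be reproduced by hand. The sketch below assumes what the summary is consistent with and what the VGG paper describes: every convolution uses a 3 x 3 kernel with one bias per filter, and every dense layer is fully connected with one bias per unit. The helper names are illustrative and are not part of Conx.

```python
# Reproduce the "Param #" column of net.summary() by hand.
# Assumption: 3x3 convolution kernels with one bias per filter.

def conv_params(in_channels, out_channels, kernel=3):
    """Weights plus biases for one 2D convolution layer."""
    return (kernel * kernel * in_channels + 1) * out_channels

def dense_params(in_units, out_units):
    """Weights plus biases for one fully connected layer."""
    return (in_units + 1) * out_units

# (input channels, output channels) for the 13 convolution layers, in order.
conv_shapes = [
    (3, 64), (64, 64),
    (64, 128), (128, 128),
    (128, 256), (256, 256), (256, 256),
    (256, 512), (512, 512), (512, 512),
    (512, 512), (512, 512), (512, 512),
]
# (input units, output units) for fc1, fc2, and predictions.
dense_shapes = [(7 * 7 * 512, 4096), (4096, 4096), (4096, 1000)]

conv_total = sum(conv_params(i, o) for i, o in conv_shapes)
dense_total = sum(dense_params(i, o) for i, o in dense_shapes)
total = conv_total + dense_total

print(conv_params(3, 64))          # block1_conv1
print(dense_params(25088, 4096))   # fc1
print(total)                       # all trainable parameters
```

Under these assumptions, fc1 alone accounts for roughly three quarters of all the weights.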

But why does this have 23 layers when it is described as having 16? The original description only counted layers that have trainable weights. That excludes the input layer (1), the pooling layers (5), and the flatten layer (1). So:

In [6]:

23 - 1 - 5 - 1

Out[6]:

16
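The same count can be made from the layer types listed in the summary, rather than by subtraction. This is a minimal sketch: the list of types is typed in by hand from the summary above, and treating only Conv2D and Dense as weighted layers is the assumption being checked.

```python
# Layer types in the order shown by net.summary().
layer_types = (
    ["InputLayer"]
    + ["Conv2D", "Conv2D", "MaxPooling2D"] * 2            # blocks 1-2
    + ["Conv2D", "Conv2D", "Conv2D", "MaxPooling2D"] * 3  # blocks 3-5
    + ["Flatten", "Dense", "Dense", "Dense"]
)

# Only these layer types carry trainable weights.
WEIGHTED = {"Conv2D", "Dense"}
weighted = [t for t in layer_types if t in WEIGHTED]

print(len(layer_types), len(weighted))
```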

3.26.2. Testing¶

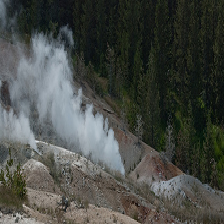

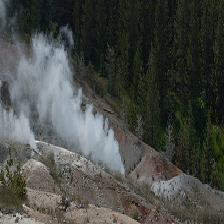

Let’s see what the network can do. Let’s grab an image and propagate it through the network (I’ll show you where this image came from in just a moment).

We download the picture:

In [7]:

cx.download("http://farm4.static.flickr.com/3426/3817878004_7dec9cdfbd.jpg", filename="geyser-1.jpg")

Using cached http://farm4.static.flickr.com/3426/3817878004_7dec9cdfbd.jpg as './geyser-1.jpg'.

In order to propagate it through the network, it needs to be resized to 224 x 224, so we can do that as we open it:

In [8]:

img = cx.image("geyser-1.jpg", resize=(224, 224))

img

Out[8]:

To propagate it through the network, it must first be converted to a 3D array (height x width x channels):

In [29]:

array = cx.image_to_array(img)

This network comes with a special preprocess() method for getting the image values into the proper range:

In [30]:

array2 = net.preprocess(array)
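What preprocess() does internally is not shown in this notebook. As an assumption, the sketch below mirrors the "caffe"-style preprocessing that Keras applies for VGG16: scale the values to 0-255, reorder the channels from RGB to BGR, and subtract the per-channel ImageNet means. Whether Conx performs exactly these steps, and whether image_to_array() returns values between 0 and 1, are assumptions. The function names are hypothetical.

```python
# Per-channel ImageNet means in BGR order, as used by Keras for VGG16.
BGR_MEANS = (103.939, 116.779, 123.68)

def preprocess_pixel(rgb, scale=255.0):
    """Map one RGB pixel in [0, 1] to a zero-centered BGR pixel."""
    r, g, b = (v * scale for v in rgb)
    return [b - BGR_MEANS[0], g - BGR_MEANS[1], r - BGR_MEANS[2]]

def preprocess_image(image):
    """Apply preprocess_pixel to every pixel of a rows x cols x 3 nested list."""
    return [[preprocess_pixel(px) for px in row] for row in image]

# A 1 x 2 image: one pure red pixel and one black pixel.
tiny = [[[1.0, 0.0, 0.0], [0.0, 0.0, 0.0]]]
result = preprocess_image(tiny)
print(result)
```

After a step like this the values are no longer in the range 0 to 1, which is why a preprocessed array rendered as an image looks different from the original.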

And now, we are ready to propagate the array through the network:

In [31]:

output = net.propagate(array2)

What is this output?

In [32]:

len(output)

Out[32]:

1000

We can pretty-format the output to see a condensed view of the values:

In [33]:

print(net.pf(output))

[0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00, 0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00, 0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00, 0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00, 0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00, 0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00, 0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00, 0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00, 0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00, 0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00, 0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00, 0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00, 0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00, 0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00, 0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00, 0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00, 0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00, 0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00, 0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00, 0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00, 0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00, 0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00, 0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00, 0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00, 0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00, 0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00, 
0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00, 0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00, 0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00, 0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00, 0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.01,0.00,0.00,0.00,0.00, 0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00, 0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00, 0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00, 0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00, 0.01,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00, 0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00, 0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.01,0.00,0.00,0.00,0.00,0.00,0.00,0.00, 0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00, 0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00, 0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00, 0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00, 0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00, 0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00, 0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00, 0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00, 0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00, 0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00, 0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00, 0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00, 0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00, 0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00, 
0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00, 0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00, 0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00, 0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00, 0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00, 0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00, 0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00, 0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00, 0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00, 0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00, 0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00, 0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00, 0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.03,0.00,0.09,0.00,0.76, 0.00,0.00,0.00,0.00,0.03,0.01,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00, 0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00,0.00]

This network comes with a special method, postprocess(), that takes these values and looks them up, giving us the category ID and a human-readable label for each of the top results:

In [34]:

net.postprocess(output)

Out[34]:

[('n09288635', 'geyser', 0.7626754641532898),

('n09246464', 'cliff', 0.08688867092132568),

('n09193705', 'alp', 0.03189372643828392),

('n09468604', 'valley', 0.027246791869401932),

('n03160309', 'dam', 0.01493859849870205)]

Indeed, this is a picture of a geyser!
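Conceptually, this lookup sorts the 1,000 output values and pairs the largest ones with their category IDs and labels. The following is a minimal sketch of that idea, not the Conx implementation. The function name top_k, the four-entry class index, and the positions assigned to each category are hypothetical.

```python
def top_k(probabilities, class_index, k=5):
    """Return the k most likely (category ID, label, probability) triples."""
    ranked = sorted(range(len(probabilities)),
                    key=lambda i: probabilities[i], reverse=True)
    return [(class_index[i][0], class_index[i][1], probabilities[i])
            for i in ranked[:k]]

# Toy example: positions 0-3 are hypothetical; the real output has 1,000 values.
class_index = {
    0: ("n09246464", "cliff"),
    1: ("n09288635", "geyser"),
    2: ("n09193705", "alp"),
    3: ("n03160309", "dam"),
}
probabilities = [0.09, 0.76, 0.03, 0.01]

best = top_k(probabilities, class_index, k=2)
print(best)
```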

We can see a picture of the entire network, with activations:

In [35]:

net.picture(array2, scale=0.25)

Out[35]:

Why is the first layer gray? Because it is a regular input layer, with 3 channels, and it is showing the first channel.

How does the network represent a picture? It re-represents the picture at every layer. Many layers are composed of features. For example, we can look at the first layer, input_1:

In [36]:

net.propagate_to_features("input_1", array2)

Out[36]:

In this example, a feature is a color channel. So feature 0 is Red, feature 1 is Green, and feature 2 is Blue.
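To make "feature" concrete for the input layer, here is a minimal sketch that splits a rows x cols x channels array, written as nested lists, into one 2D grid per channel. The helper split_channels is hypothetical, and the channel order follows the description above.

```python
def split_channels(image):
    """Split a rows x cols x channels nested list into one 2D grid per channel."""
    n_channels = len(image[0][0])
    return [[[pixel[c] for pixel in row] for row in image]
            for c in range(n_channels)]

# A 2 x 2 image with 3 channels per pixel.
image = [[[0.1, 0.2, 0.3], [0.4, 0.5, 0.6]],
         [[0.7, 0.8, 0.9], [1.0, 0.0, 0.5]]]

red, green, blue = split_channels(image)
print(red)
```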

We can view all channels together by propagating to the input layer, and then viewing the output as an image:

In [37]:

features = net.propagate_to("input_1", array2) ## this should be identical to array2

cx.array_to_image(features)

Out[37]:

Notice that it looks different from the original:

In [38]:

img

Out[38]:

Why the difference? Remember that the image was preprocessed.

We can also look at the first Convolutional layer, block1_conv1:

In [58]:

net.propagate_to_features("block1_conv1", array2, scale=0.5, cols=10)

Out[58]:

We don’t know what each of the 64 features represents here because it was learned. You might be able to see some patterns though.
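Each feature in a convolutional layer is produced by sliding a small kernel over the layer's input. The sketch below shows the operation for a single input channel and a single hand-written kernel, followed by a ReLU. It is a simplification: the real layer sums over all input channels, its kernels are learned rather than written by hand, and it pads the input so the output keeps the same width and height, whereas this sketch does no padding.

```python
def conv2d_single(image, kernel, bias=0.0):
    """Unpadded 2D cross-correlation of one channel with one kernel, then ReLU."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for r in range(out_h):
        row = []
        for c in range(out_w):
            total = bias
            for i in range(kh):
                for j in range(kw):
                    total += image[r + i][c + j] * kernel[i][j]
            row.append(max(0.0, total))  # ReLU clamps negatives to zero
        output.append(row)
    return output

# A 4 x 5 image that is dark on the left and bright on the right.
image = [[0, 0, 0, 1, 1] for _ in range(4)]
# A hand-written kernel that responds to dark-to-bright vertical edges.
edge_kernel = [[-1, 0, 1]] * 3

feature_map = conv2d_single(image, edge_kernel)
print(feature_map)
```

Reversing the kernel to respond to bright-to-dark edges gives all zeros on this image, because the ReLU discards the negative responses.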

We can also look at block5_pool, the pooling layer that follows the last convolutional block:

In [61]:

net.propagate_to_features("block5_pool", array2, scale=0.25, cols=10)

Out[61]:

Again, these 512 features were learned.
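The summary shows each pooling layer halving the width and height, which is how a 224 x 224 input ends up as 7 x 7 at block5_pool. Here is a minimal sketch of that step, assuming 2 x 2 max pooling with a stride of 2 (consistent with the shapes in the summary). The helper max_pool_2x2 is hypothetical.

```python
def max_pool_2x2(grid):
    """2x2 max pooling with stride 2 over a 2D grid with even dimensions."""
    return [[max(grid[r][c], grid[r][c + 1],
                 grid[r + 1][c], grid[r + 1][c + 1])
             for c in range(0, len(grid[0]), 2)]
            for r in range(0, len(grid), 2)]

grid = [[1, 2, 3, 4],
        [5, 6, 7, 8],
        [9, 10, 11, 12],
        [13, 14, 15, 16]]
pooled = max_pool_2x2(grid)
print(pooled)

# Width (and height) of the feature maps after each of the five pooling layers.
size = 224
sizes = [size]
for _ in range(5):
    size //= 2
    sizes.append(size)
print(sizes)
```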

Now, let’s examine the output and the categories. We recall that the output of the network was:

In [62]:

net.postprocess(output)

Out[62]:

[('n09288635', 'geyser', 0.7626754641532898),

('n09246464', 'cliff', 0.08688867092132568),

('n09193705', 'alp', 0.03189372643828392),

('n09468604', 'valley', 0.027246791869401932),

('n03160309', 'dam', 0.01493859849870205)]

We see that the label “geyser” is category ID “n09288635”. We can see all of the images in this set with the following:

In [63]:

from IPython.display import IFrame

def show_category(category):
    # Embed the ImageNet synset page for this category ID in the notebook.
    return IFrame(src="http://imagenet.stanford.edu/synset?wnid=%s" % category, width="100%", height="500px")

In [64]:

show_category('n09288635')

Out[64]:

Let’s try another image, this time, a picture of a dog. First, I select one from the dog set:

In [65]:

cx.download("http://farm4.static.flickr.com/3657/3415738992_fecc303889.jpg", filename="dog.jpg")

Using cached http://farm4.static.flickr.com/3657/3415738992_fecc303889.jpg as './dog.jpg'.

Open it up as an image in the proper size:

In [66]:

img = cx.image("dog.jpg", resize=(224,224))

img

Out[66]:

Convert to array, and preprocess the array:

In [67]:

array = net.preprocess(cx.image_to_array(img))

And finally, propagate, and postprocess it:

In [68]:

net.postprocess(net.propagate(array))

Out[68]:

[('n02100236', 'German_short-haired_pointer', 0.6061604619026184),

('n02099712', 'Labrador_retriever', 0.17321829497814178),

('n02109047', 'Great_Dane', 0.1112799122929573),

('n02100583', 'vizsla', 0.013886826112866402),

('n02099849', 'Chesapeake_Bay_retriever', 0.011956770904362202)]

Is that a German_short-haired_pointer? Let’s compare:

In [69]:

show_category("n02100236")

Out[69]:

That could be correct. To know for certain, we’d have to know what the target is for that image (or find it in the above pictures). You can download the training set of target categories and image URLs here:

http://image-net.org/download-imageurls

The fall 2011 dataset is 350 MB compressed, and 1.1 GB uncompressed. Searching through this file, we find that this image is actually ‘n00015388_34057’. What is that? Let’s see.

To explore all of the categories, we can download the JSON file describing them:

In [70]:

cx.download("https://s3.amazonaws.com/deep-learning-models/image-models/imagenet_class_index.json")

Using cached https://s3.amazonaws.com/deep-learning-models/image-models/imagenet_class_index.json as './imagenet_class_index.json'.

In [71]:

import json

In [72]:

data = json.load(open("imagenet_class_index.json"))

In [73]:

len(data)

Out[73]:

1000

And here are all 1,000 labels:

In [74]:

print(sorted([label for (category,label) in data.values()]))

['Afghan_hound', 'African_chameleon', 'African_crocodile', 'African_elephant', 'African_grey', 'African_hunting_dog', 'Airedale', 'American_Staffordshire_terrier', 'American_alligator', 'American_black_bear', 'American_chameleon', 'American_coot', 'American_egret', 'American_lobster', 'Angora', 'Appenzeller', 'Arabian_camel', 'Arctic_fox', 'Australian_terrier', 'Band_Aid', 'Bedlington_terrier', 'Bernese_mountain_dog', 'Blenheim_spaniel', 'Border_collie', 'Border_terrier', 'Boston_bull', 'Bouvier_des_Flandres', 'Brabancon_griffon', 'Brittany_spaniel', 'CD_player', 'Cardigan', 'Chesapeake_Bay_retriever', 'Chihuahua', 'Christmas_stocking', 'Crock_Pot', 'Dandie_Dinmont', 'Doberman', 'Dungeness_crab', 'Dutch_oven', 'Egyptian_cat', 'English_foxhound', 'English_setter', 'English_springer', 'EntleBucher', 'Eskimo_dog', 'European_fire_salamander', 'European_gallinule', 'French_bulldog', 'French_horn', 'French_loaf', 'German_shepherd', 'German_short-haired_pointer', 'Gila_monster', 'Gordon_setter', 'Granny_Smith', 'Great_Dane', 'Great_Pyrenees', 'Greater_Swiss_Mountain_dog', 'Ibizan_hound', 'Indian_cobra', 'Indian_elephant', 'Irish_setter', 'Irish_terrier', 'Irish_water_spaniel', 'Irish_wolfhound', 'Italian_greyhound', 'Japanese_spaniel', 'Kerry_blue_terrier', 'Komodo_dragon', 'Labrador_retriever', 'Lakeland_terrier', 'Leonberg', 'Lhasa', 'Loafer', 'Madagascar_cat', 'Maltese_dog', 'Mexican_hairless', 'Model_T', 'Newfoundland', 'Norfolk_terrier', 'Norwegian_elkhound', 'Norwich_terrier', 'Old_English_sheepdog', 'Pekinese', 'Pembroke', 'Persian_cat', 'Petri_dish', 'Polaroid_camera', 'Pomeranian', 'Rhodesian_ridgeback', 'Rottweiler', 'Saint_Bernard', 'Saluki', 'Samoyed', 'Scotch_terrier', 'Scottish_deerhound', 'Sealyham_terrier', 'Shetland_sheepdog', 'Shih-Tzu', 'Siamese_cat', 'Siberian_husky', 'Staffordshire_bullterrier', 'Sussex_spaniel', 'Tibetan_mastiff', 'Tibetan_terrier', 'Walker_hound', 'Weimaraner', 'Welsh_springer_spaniel', 'West_Highland_white_terrier', 'Windsor_tie', 
'Yorkshire_terrier', 'abacus', 'abaya', 'academic_gown', 'accordion', 'acorn', 'acorn_squash', 'acoustic_guitar', 'admiral', 'affenpinscher', 'agama', 'agaric', 'aircraft_carrier', 'airliner', 'airship', 'albatross', 'alligator_lizard', 'alp', 'altar', 'ambulance', 'amphibian', 'analog_clock', 'anemone_fish', 'ant', 'apiary', 'apron', 'armadillo', 'artichoke', 'ashcan', 'assault_rifle', 'axolotl', 'baboon', 'backpack', 'badger', 'bagel', 'bakery', 'balance_beam', 'bald_eagle', 'balloon', 'ballplayer', 'ballpoint', 'banana', 'banded_gecko', 'banjo', 'bannister', 'barbell', 'barber_chair', 'barbershop', 'barn', 'barn_spider', 'barometer', 'barracouta', 'barrel', 'barrow', 'baseball', 'basenji', 'basketball', 'basset', 'bassinet', 'bassoon', 'bath_towel', 'bathing_cap', 'bathtub', 'beach_wagon', 'beacon', 'beagle', 'beaker', 'bearskin', 'beaver', 'bee', 'bee_eater', 'beer_bottle', 'beer_glass', 'bell_cote', 'bell_pepper', 'bib', 'bicycle-built-for-two', 'bighorn', 'bikini', 'binder', 'binoculars', 'birdhouse', 'bison', 'bittern', 'black-and-tan_coonhound', 'black-footed_ferret', 'black_and_gold_garden_spider', 'black_grouse', 'black_stork', 'black_swan', 'black_widow', 'bloodhound', 'bluetick', 'boa_constrictor', 'boathouse', 'bobsled', 'bolete', 'bolo_tie', 'bonnet', 'book_jacket', 'bookcase', 'bookshop', 'borzoi', 'bottlecap', 'bow', 'bow_tie', 'box_turtle', 'boxer', 'brain_coral', 'brambling', 'brass', 'brassiere', 'breakwater', 'breastplate', 'briard', 'broccoli', 'broom', 'brown_bear', 'bubble', 'bucket', 'buckeye', 'buckle', 'bulbul', 'bull_mastiff', 'bullet_train', 'bulletproof_vest', 'bullfrog', 'burrito', 'bustard', 'butcher_shop', 'butternut_squash', 'cab', 'cabbage_butterfly', 'cairn', 'caldron', 'can_opener', 'candle', 'cannon', 'canoe', 'capuchin', 'car_mirror', 'car_wheel', 'carbonara', 'cardigan', 'cardoon', 'carousel', "carpenter's_kit", 'carton', 'cash_machine', 'cassette', 'cassette_player', 'castle', 'catamaran', 'cauliflower', 'cello', 
'cellular_telephone', 'centipede', 'chain', 'chain_mail', 'chain_saw', 'chainlink_fence', 'chambered_nautilus', 'cheeseburger', 'cheetah', 'chest', 'chickadee', 'chiffonier', 'chime', 'chimpanzee', 'china_cabinet', 'chiton', 'chocolate_sauce', 'chow', 'church', 'cicada', 'cinema', 'cleaver', 'cliff', 'cliff_dwelling', 'cloak', 'clog', 'clumber', 'cock', 'cocker_spaniel', 'cockroach', 'cocktail_shaker', 'coffee_mug', 'coffeepot', 'coho', 'coil', 'collie', 'colobus', 'combination_lock', 'comic_book', 'common_iguana', 'common_newt', 'computer_keyboard', 'conch', 'confectionery', 'consomme', 'container_ship', 'convertible', 'coral_fungus', 'coral_reef', 'corkscrew', 'corn', 'cornet', 'coucal', 'cougar', 'cowboy_boot', 'cowboy_hat', 'coyote', 'cradle', 'crane', 'crane', 'crash_helmet', 'crate', 'crayfish', 'crib', 'cricket', 'croquet_ball', 'crossword_puzzle', 'crutch', 'cucumber', 'cuirass', 'cup', 'curly-coated_retriever', 'custard_apple', 'daisy', 'dalmatian', 'dam', 'damselfly', 'desk', 'desktop_computer', 'dhole', 'dial_telephone', 'diamondback', 'diaper', 'digital_clock', 'digital_watch', 'dingo', 'dining_table', 'dishrag', 'dishwasher', 'disk_brake', 'dock', 'dogsled', 'dome', 'doormat', 'dough', 'dowitcher', 'dragonfly', 'drake', 'drilling_platform', 'drum', 'drumstick', 'dugong', 'dumbbell', 'dung_beetle', 'ear', 'earthstar', 'echidna', 'eel', 'eft', 'eggnog', 'electric_fan', 'electric_guitar', 'electric_locomotive', 'electric_ray', 'entertainment_center', 'envelope', 'espresso', 'espresso_maker', 'face_powder', 'feather_boa', 'fiddler_crab', 'fig', 'file', 'fire_engine', 'fire_screen', 'fireboat', 'flagpole', 'flamingo', 'flat-coated_retriever', 'flatworm', 'flute', 'fly', 'folding_chair', 'football_helmet', 'forklift', 'fountain', 'fountain_pen', 'four-poster', 'fox_squirrel', 'freight_car', 'frilled_lizard', 'frying_pan', 'fur_coat', 'gar', 'garbage_truck', 'garden_spider', 'garter_snake', 'gas_pump', 'gasmask', 'gazelle', 'geyser', 'giant_panda', 
'giant_schnauzer', 'gibbon', 'go-kart', 'goblet', 'golden_retriever', 'goldfinch', 'goldfish', 'golf_ball', 'golfcart', 'gondola', 'gong', 'goose', 'gorilla', 'gown', 'grand_piano', 'grasshopper', 'great_grey_owl', 'great_white_shark', 'green_lizard', 'green_mamba', 'green_snake', 'greenhouse', 'grey_fox', 'grey_whale', 'grille', 'grocery_store', 'groenendael', 'groom', 'ground_beetle', 'guacamole', 'guenon', 'guillotine', 'guinea_pig', 'gyromitra', 'hair_slide', 'hair_spray', 'half_track', 'hammer', 'hammerhead', 'hamper', 'hamster', 'hand-held_computer', 'hand_blower', 'handkerchief', 'hard_disc', 'hare', 'harmonica', 'harp', 'hartebeest', 'harvester', 'harvestman', 'hatchet', 'hay', 'head_cabbage', 'hen', 'hen-of-the-woods', 'hermit_crab', 'hip', 'hippopotamus', 'hog', 'hognose_snake', 'holster', 'home_theater', 'honeycomb', 'hook', 'hoopskirt', 'horizontal_bar', 'hornbill', 'horned_viper', 'horse_cart', 'hot_pot', 'hotdog', 'hourglass', 'house_finch', 'howler_monkey', 'hummingbird', 'hyena', 'iPod', 'ibex', 'ice_bear', 'ice_cream', 'ice_lolly', 'impala', 'indigo_bunting', 'indri', 'iron', 'isopod', 'jacamar', "jack-o'-lantern", 'jackfruit', 'jaguar', 'jay', 'jean', 'jeep', 'jellyfish', 'jersey', 'jigsaw_puzzle', 'jinrikisha', 'joystick', 'junco', 'keeshond', 'kelpie', 'killer_whale', 'kimono', 'king_crab', 'king_penguin', 'king_snake', 'kit_fox', 'kite', 'knee_pad', 'knot', 'koala', 'komondor', 'kuvasz', 'lab_coat', 'lacewing', 'ladle', 'ladybug', 'lakeside', 'lampshade', 'langur', 'laptop', 'lawn_mower', 'leaf_beetle', 'leafhopper', 'leatherback_turtle', 'lemon', 'lens_cap', 'leopard', 'lesser_panda', 'letter_opener', 'library', 'lifeboat', 'lighter', 'limousine', 'limpkin', 'liner', 'lion', 'lionfish', 'lipstick', 'little_blue_heron', 'llama', 'loggerhead', 'long-horned_beetle', 'lorikeet', 'lotion', 'loudspeaker', 'loupe', 'lumbermill', 'lycaenid', 'lynx', 'macaque', 'macaw', 'magnetic_compass', 'magpie', 'mailbag', 'mailbox', 'maillot', 'maillot', 
'malamute', 'malinois', 'manhole_cover', 'mantis', 'maraca', 'marimba', 'marmoset', 'marmot', 'mashed_potato', 'mask', 'matchstick', 'maypole', 'maze', 'measuring_cup', 'meat_loaf', 'medicine_chest', 'meerkat', 'megalith', 'menu', 'microphone', 'microwave', 'military_uniform', 'milk_can', 'miniature_pinscher', 'miniature_poodle', 'miniature_schnauzer', 'minibus', 'miniskirt', 'minivan', 'mink', 'missile', 'mitten', 'mixing_bowl', 'mobile_home', 'modem', 'monarch', 'monastery', 'mongoose', 'monitor', 'moped', 'mortar', 'mortarboard', 'mosque', 'mosquito_net', 'motor_scooter', 'mountain_bike', 'mountain_tent', 'mouse', 'mousetrap', 'moving_van', 'mud_turtle', 'mushroom', 'muzzle', 'nail', 'neck_brace', 'necklace', 'nematode', 'night_snake', 'nipple', 'notebook', 'obelisk', 'oboe', 'ocarina', 'odometer', 'oil_filter', 'orange', 'orangutan', 'organ', 'oscilloscope', 'ostrich', 'otter', 'otterhound', 'overskirt', 'ox', 'oxcart', 'oxygen_mask', 'oystercatcher', 'packet', 'paddle', 'paddlewheel', 'padlock', 'paintbrush', 'pajama', 'palace', 'panpipe', 'paper_towel', 'papillon', 'parachute', 'parallel_bars', 'park_bench', 'parking_meter', 'partridge', 'passenger_car', 'patas', 'patio', 'pay-phone', 'peacock', 'pedestal', 'pelican', 'pencil_box', 'pencil_sharpener', 'perfume', 'photocopier', 'pick', 'pickelhaube', 'picket_fence', 'pickup', 'pier', 'piggy_bank', 'pill_bottle', 'pillow', 'pineapple', 'ping-pong_ball', 'pinwheel', 'pirate', 'pitcher', 'pizza', 'plane', 'planetarium', 'plastic_bag', 'plate', 'plate_rack', 'platypus', 'plow', 'plunger', 'pole', 'polecat', 'police_van', 'pomegranate', 'poncho', 'pool_table', 'pop_bottle', 'porcupine', 'pot', 'potpie', "potter's_wheel", 'power_drill', 'prairie_chicken', 'prayer_rug', 'pretzel', 'printer', 'prison', 'proboscis_monkey', 'projectile', 'projector', 'promontory', 'ptarmigan', 'puck', 'puffer', 'pug', 'punching_bag', 'purse', 'quail', 'quill', 'quilt', 'racer', 'racket', 'radiator', 'radio', 'radio_telescope', 
'rain_barrel', 'ram', 'rapeseed', 'recreational_vehicle', 'red-backed_sandpiper', 'red-breasted_merganser', 'red_fox', 'red_wine', 'red_wolf', 'redbone', 'redshank', 'reel', 'reflex_camera', 'refrigerator', 'remote_control', 'restaurant', 'revolver', 'rhinoceros_beetle', 'rifle', 'ringlet', 'ringneck_snake', 'robin', 'rock_beauty', 'rock_crab', 'rock_python', 'rocking_chair', 'rotisserie', 'rubber_eraser', 'ruddy_turnstone', 'ruffed_grouse', 'rugby_ball', 'rule', 'running_shoe', 'safe', 'safety_pin', 'saltshaker', 'sandal', 'sandbar', 'sarong', 'sax', 'scabbard', 'scale', 'schipperke', 'school_bus', 'schooner', 'scoreboard', 'scorpion', 'screen', 'screw', 'screwdriver', 'scuba_diver', 'sea_anemone', 'sea_cucumber', 'sea_lion', 'sea_slug', 'sea_snake', 'sea_urchin', 'seashore', 'seat_belt', 'sewing_machine', 'shield', 'shoe_shop', 'shoji', 'shopping_basket', 'shopping_cart', 'shovel', 'shower_cap', 'shower_curtain', 'siamang', 'sidewinder', 'silky_terrier', 'ski', 'ski_mask', 'skunk', 'sleeping_bag', 'slide_rule', 'sliding_door', 'slot', 'sloth_bear', 'slug', 'snail', 'snorkel', 'snow_leopard', 'snowmobile', 'snowplow', 'soap_dispenser', 'soccer_ball', 'sock', 'soft-coated_wheaten_terrier', 'solar_dish', 'sombrero', 'sorrel', 'soup_bowl', 'space_bar', 'space_heater', 'space_shuttle', 'spaghetti_squash', 'spatula', 'speedboat', 'spider_monkey', 'spider_web', 'spindle', 'spiny_lobster', 'spoonbill', 'sports_car', 'spotlight', 'spotted_salamander', 'squirrel_monkey', 'stage', 'standard_poodle', 'standard_schnauzer', 'starfish', 'steam_locomotive', 'steel_arch_bridge', 'steel_drum', 'stethoscope', 'stingray', 'stinkhorn', 'stole', 'stone_wall', 'stopwatch', 'stove', 'strainer', 'strawberry', 'street_sign', 'streetcar', 'stretcher', 'studio_couch', 'stupa', 'sturgeon', 'submarine', 'suit', 'sulphur-crested_cockatoo', 'sulphur_butterfly', 'sundial', 'sunglass', 'sunglasses', 'sunscreen', 'suspension_bridge', 'swab', 'sweatshirt', 'swimming_trunks', 'swing', 'switch', 
'syringe', 'tabby', 'table_lamp', 'tailed_frog', 'tank', 'tape_player', 'tarantula', 'teapot', 'teddy', 'television', 'tench', 'tennis_ball', 'terrapin', 'thatch', 'theater_curtain', 'thimble', 'three-toed_sloth', 'thresher', 'throne', 'thunder_snake', 'tick', 'tiger', 'tiger_beetle', 'tiger_cat', 'tiger_shark', 'tile_roof', 'timber_wolf', 'titi', 'toaster', 'tobacco_shop', 'toilet_seat', 'toilet_tissue', 'torch', 'totem_pole', 'toucan', 'tow_truck', 'toy_poodle', 'toy_terrier', 'toyshop', 'tractor', 'traffic_light', 'trailer_truck', 'tray', 'tree_frog', 'trench_coat', 'triceratops', 'tricycle', 'trifle', 'trilobite', 'trimaran', 'tripod', 'triumphal_arch', 'trolleybus', 'trombone', 'tub', 'turnstile', 'tusker', 'typewriter_keyboard', 'umbrella', 'unicycle', 'upright', 'vacuum', 'valley', 'vase', 'vault', 'velvet', 'vending_machine', 'vestment', 'viaduct', 'vine_snake', 'violin', 'vizsla', 'volcano', 'volleyball', 'vulture', 'waffle_iron', 'walking_stick', 'wall_clock', 'wallaby', 'wallet', 'wardrobe', 'warplane', 'warthog', 'washbasin', 'washer', 'water_bottle', 'water_buffalo', 'water_jug', 'water_ouzel', 'water_snake', 'water_tower', 'weasel', 'web_site', 'weevil', 'whippet', 'whiptail', 'whiskey_jug', 'whistle', 'white_stork', 'white_wolf', 'wig', 'wild_boar', 'window_screen', 'window_shade', 'wine_bottle', 'wing', 'wire-haired_fox_terrier', 'wok', 'wolf_spider', 'wombat', 'wood_rabbit', 'wooden_spoon', 'wool', 'worm_fence', 'wreck', 'yawl', "yellow_lady's_slipper", 'yurt', 'zebra', 'zucchini']

In [75]:

labels = {label: category for (category,label) in data.values()}

categories = {category:label for (category,label) in data.values()}
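One caveat: the sorted list above contains 'crane' and 'maillot' twice, so a dictionary keyed by label keeps only one category ID for each of them. The sketch below shows the effect on a three-entry stand-in for the JSON data, and one possible alternative that keeps every ID. The two "crane" IDs and the index keys are placeholders, not real values, and labels_multi is a hypothetical name.

```python
from collections import defaultdict

# Same shape as imagenet_class_index.json: index -> [category ID, label].
# The index keys and the two "crane" IDs are placeholders, not real values.
data = {
    "0": ["n09288635", "geyser"],
    "1": ["n_placeholder_bird", "crane"],
    "2": ["n_placeholder_machine", "crane"],
}

# Keyed by label: the second "crane" silently overwrites the first.
labels = {label: category for (category, label) in data.values()}

# Alternative: keep a list of category IDs for every label.
labels_multi = defaultdict(list)
for category, label in data.values():
    labels_multi[label].append(category)

print(len(data), len(labels))
print(labels_multi["crane"])
```

The categories dictionary is unaffected, because category IDs are unique.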

If you don’t recognize a label, you can look at the images in that dataset:

In [76]:

category = labels['rock_beauty']

In [77]:

show_category(category)

Out[77]:

Thus, “rock_beauty” is a fish!

If we look up the category part of the dog picture’s ID (n00015388), we see that the image is actually labeled “Animal, animate being, beast, brute, creature, fauna”. That is not one of the 1,000 categories the network was trained on, so the network did very well on a novel image.
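This check can be written in a few lines. The sketch assumes that an image ID has the form category ID, underscore, image number, which matches 'n00015388_34057'. The helper split_image_id is hypothetical, and the categories dictionary here holds only two of the 1,000 entries, taken from the outputs above.

```python
def split_image_id(image_id):
    """Split an ID such as 'n00015388_34057' into (category ID, image number)."""
    category, _, number = image_id.partition("_")
    return category, int(number)

# Two of the 1,000 trained categories, taken from the outputs above.
categories = {
    "n09288635": "geyser",
    "n02100236": "German_short-haired_pointer",
}

category, number = split_image_id("n00015388_34057")
is_trained_category = category in categories
print(category, number, is_trained_category)
```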